‘It’s mostly good people making bad decisions who join violent extremist groups.’ — Yasmin Green

I think this is so important that I am reposting this Wired Business Conference summary by Emily Dreyfuss, originally titled “Hacking Online Hate Means Talking to the Humans Behind It.” Alphabet appears to be investing significant resources in fixing the dark side of the Internet.

Yasmin Green leads a team at Google’s parent company with an audacious goal: solving the thorniest geopolitical problems that emerge online. Jigsaw, where she is the head of research and development, is a think tank within Alphabet tasked with fighting the unintended unsavory consequences of technological progress. Green’s radical strategy for tackling the dark side of the web? Talk directly to the humans behind it.

That means listening to fake news creators, jihadis, and cyber bullies so that she and her team can understand their motivations, processes, and goals. “We look at censorship, cybersecurity, cyberattacks, ISIS—everything the creators of the internet did not imagine the internet would be used for,” Green said today at WIRED’s 2017 Business Conference in New York.

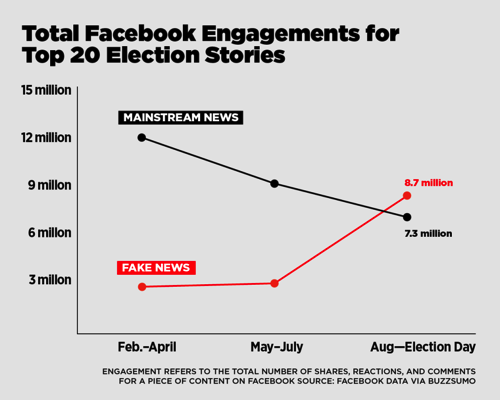

Last week, Green traveled to Macedonia to meet with peddlers of fake news, those click-hungry opportunists who had such a sway over the 2016 presidential election in the US. Her goal was to understand the business model of fake news dissemination so that she and her team can create algorithms to identify the process and disrupt it. She learned that these content farms utilize social media and online advertising—the same tools used by legit online publishers. “[The problem of fake news] starts off in a way that algorithms should be able to detect,” she said. Her team is now working on a tool that could be shared across Google as well as competing platforms like Facebook and Twitter to thwart that system.

Along with fake news, Jigsaw is intensely focused on combatting online pro-terror propaganda. Last year, Green and her team traveled to Iraq to speak directly to ex-ISIS recruits. The conversations led to a tool called the Redirect Method, which uses machine learning to detect extremist sympathies based on search patterns. Once it detects them, the Redirect Method serves these users videos that show the ugly side of ISIS, a counternarrative to the allure of the ideology. By the point that they are buying a ticket to join the caliphate, she said, it is too late.

“It’s mostly good people making bad decisions who join violent extremist groups,” Green says. “So the job was: let’s respect that these people are not evil and they are buying into something and let’s use the power of targeted advertising to reach them, the people who are sympathetic but not sold.”

Since the Redirect Method launched last year, 300,000 people have watched videos it served up, for a total of more than half a million minutes, Green said.

Beyond fake news and extremism, Green’s team has also created a tool to target toxic speech in comment sections on news organizations’ sites. They created Perspective, a machine-learning algorithm that uses context and sentiment training to detect potential online harassment and alert moderators to the problem. The beta version is being used by the likes of the New York Times. But as Green explained, it’s a constantly evolving tool. One potential worry is that it could itself be biased against certain words, ideas, even tones of speech. To guard against misuse, Jigsaw decided not to open up the API to allow others to set the parameters themselves, fearing that an authoritarian regime might use the tool for full-on censorship.

“We have to take measures to keep these tools from being misused,” she said. Just like the internet itself, which has been used in destructive ways its creators could never have imagined, Green is aware that the solutions her team creates could also be abused. That risk is always on her mind, she says. But it’s not a reason to stop trying.

My personal view is that Facebook is by far the bigger problem. I don’t expect much action by Facebook because their #1 incentive is to keep people on the site and feed the ads. Fake news and anger-inducing content do just that. I sincerely hope I’m wrong about that.